A book by William H. Calvin, University of Washington, Seattle, Washington 98195-1800 USA

THE CEREBRAL CODE: Thinking a Thought in the Mosaics of the Mind. Available from MIT Press and amazon.com. Copyright 1996 by William H. Calvin.

Chimes on the Quarter Hour

We have an urge, almost a compulsion, to finish a well-known sequence. Recall how a crying child can be distracted by singing a familiar nursery rhyme and then prompting the child to fill in the last word of the line. This is so compulsive a response that it often overrides the child’s crying and eventually stops it. We create sequences when we speak a sentence that we’ve never spoken before, or improvise at jazz, or plan a career. We invent dance steps. Even as four-year-olds, we can play roles, achieving a level of abstraction not seen in even the smartest apes. Many of our beyond-the-apes behaviors involve novel strings of behaviors, often compounded: phonemes chunked into words, words into word phrases, and (as in this paragraph) word phrases into complicated sentences with nested ideas. Rules for made-up games illustrate the memory aspect of this novelty: we must judge possible moves against serial-order rules, as in solitaire, where you must alternate colors as you place cards in descending order. Preschool children will even make up such arbitrary rules and then judge possible actions against them. In a card game, we abandon many of the moves we consider once we check them against our serial-order memories of the rules. In shaping up a novel sentence to speak, we check our candidate word strings against the overlearned ordering rules that we call syntax and grammar. We even memorize unique sequential episodes without intending to do so: when you try to remember where you lost your keys, you can often recall the various places you visited since you last used a key.

Narrative is one of the ways in which we speak, one of the large categories in which we think. Plot is its thread of design and its active shaping force, the product of our refusal to allow temporality to be meaningless, our stubborn insistence on making meaning in the world and in our lives.


There is a great deal of information hidden in the overall flow of even nonsense words, presumably the reason why we appreciate Charles Dodgson’s youthful poem: “’Twas brillig, and the slithy toves / Did gyre and gimble in the wabe: / All mimsy were the borogoves, / And the mome raths outgrabe.” Sequential form alone, with even less hint of meaning, can be sufficient to bring forward a complete phrase from memory. Sometimes we can do this even from what initially appears totally incomprehensible (I am indebted to Dan Dennett for this lovely example of just-sufficient disguise).

Now even if you only know a few words of this language, and have never seen the faded, centuries-old font and the spellings of the time, you can probably recognize this quotation within a few minutes. That is, you can recognize that it’s familiar but you can’t recall it in detail without a lot more effort. But once you do recall it, and its short-form name, X, it will become so obvious that it will be difficult to look back and see it as anything other than X (that’s because your X attractor has been activated, firmly capturing variants).

In trying to comprehend a sentence, we often seek out missing information because we have encountered a word that must connect with certain other portions of the string. I discuss this in chapter 5 of How Brains Think as part of linguistic argument structure: a verb such as give requires three nouns to fill the roles of actor, recipient, and object given. When you encounter give, you go searching for all three nouns or noun phrases. One now sees billboard advertising slogans that read simply Give him. We easily infer “you” as the implied actor, but the still-incomplete sentence sends us off on a compulsive search for the object to be given (a technique that makes the ad more memorable by increasing dwell duration). Can hexagonal cloning competitions help us appreciate the underpinnings of these abilities to string things together? And the search for the missing segment?


State machines are the traditional model for sequencing and stage-setting. The Barcelona subway, for example, has ticket machines that require me to first select the ticket type, then the number of passengers, then deposit the coins, then retrieve the ticket. The successful completion of one state causes the machine to advance to its next state (though it times out if I spend too much time fumbling for the correct coins). Barcelona even has an automata museum devoted to nineteenth-century state machines and robots. State machines are often easy to build but difficult to operate intuitively (just think of programming your videotape recorder). Might switching gaits of locomotion involve a state machine? Note that a jog or lope is not required as an intermediate gait between walk and run; the system can make transitions in various orders, suggesting that it is not a simple state machine. Still, stage-setting remains an important possibility for advancing a chain of thought or action. Chimes on the quarter hour were easily heard from my hotel balcony in Barcelona, the local church reminding me that time flies — and that I write too slowly. Such chimes are the most familiar example of combining spatiotemporal pattern and state machine. American grandfather clocks, such as the one my father once constructed, use a slightly different tune on the three-quarter hour. I have persuaded my mother to write out the corresponding musical notes that adorn this chapter (I can read music but not write it down, another example of recall being more difficult than recognition, of production being more difficult than understanding).
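To make the ticket-machine example concrete, here is a minimal Python sketch of a strict state machine. It assumes a 30-second per-step timeout (the text gives no figure), and the names `State`, `NEXT`, `TicketMachine`, and `timeout_s` are hypothetical. Each state can advance only to the next one, and input that arrives out of order is simply ignored.

```python
from enum import Enum, auto


class State(Enum):
    """Stages of a hypothetical ticket machine, in the order the text lists them."""
    SELECT_TYPE = auto()
    SELECT_PASSENGERS = auto()
    INSERT_COINS = auto()
    RETRIEVE_TICKET = auto()
    DONE = auto()


# Completing a state is the only way to advance, and only to the next state.
NEXT = {
    State.SELECT_TYPE: State.SELECT_PASSENGERS,
    State.SELECT_PASSENGERS: State.INSERT_COINS,
    State.INSERT_COINS: State.RETRIEVE_TICKET,
    State.RETRIEVE_TICKET: State.DONE,
}


class TicketMachine:
    def __init__(self, timeout_s: float = 30.0) -> None:
        self.state = State.SELECT_TYPE
        self.timeout_s = timeout_s  # assumed per-step time limit (not from the text)
        self.last_step_time = 0.0

    def step(self, completed: State, now: float) -> State:
        """Report that the user finished `completed` at time `now` (seconds)."""
        # Mid-transaction and too long since the last step: time out, start over.
        in_progress = self.state not in (State.SELECT_TYPE, State.DONE)
        if in_progress and now - self.last_step_time > self.timeout_s:
            self.state = State.SELECT_TYPE
        # Only completing the *current* state advances the machine;
        # out-of-order input leaves it where it is.
        if completed is self.state and self.state in NEXT:
            self.state = NEXT[self.state]
            self.last_step_time = now
        return self.state


m = TicketMachine(timeout_s=30.0)
print(m.step(State.SELECT_TYPE, now=1.0))        # State.SELECT_PASSENGERS
print(m.step(State.INSERT_COINS, now=2.0))       # out of order: State.SELECT_PASSENGERS
print(m.step(State.SELECT_PASSENGERS, now=3.0))  # State.INSERT_COINS
print(m.step(State.INSERT_COINS, now=60.0))      # fumbled too long: State.SELECT_TYPE
```

That rigidity is the contrast with gaits: a walk can go directly to a run without a jog in between, which a fixed successor table like `NEXT` would forbid.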


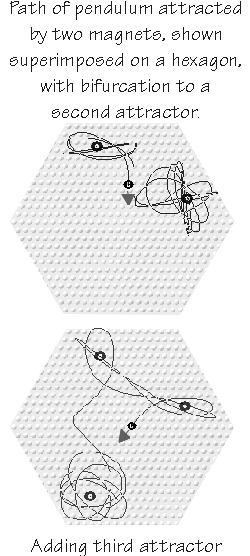

Spatiotemporal patterns within the hexagon are, so far, my model for an activated cerebral code — not only for objects and events but for composites such as categories. For the stored code, it’s the spatial-only patterning of synaptic connections that gives rise to basins of attraction. Resurrection of a complex spatiotemporal pattern might involve changing from one attractor to another. Adding on another attractor might, on the chaotic itinerancy model, be like adding another city to the traveling salesman’s route.
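One standard way to see how a purely spatial pattern of connection strengths can create basins of attraction is a Hopfield network. It is swapped in here as a textbook illustration, not as the hexagonal mechanism this book proposes. In this sketch (all names hypothetical), three patterns are stored in Hebbian weights, and a variant of one of them, with four of its 64 units flipped, settles back into the stored version.

```python
from typing import List


def hadamard_row(r: int, n: int) -> List[int]:
    """Row r of an n x n Sylvester-Hadamard matrix (n a power of 2):
    entries are +1/-1, and any two distinct rows are exactly orthogonal."""
    return [-1 if bin(r & c).count("1") % 2 else 1 for c in range(n)]


def train(patterns: List[List[int]]) -> List[List[float]]:
    """Hebbian outer-product rule: the stored code lives only in the weights."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w


def settle(w: List[List[float]], cue: List[int], max_sweeps: int = 10) -> List[int]:
    """Update units one at a time until nothing changes (a fixed-point attractor)."""
    s = list(cue)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            h = sum(w_ij * s_j for w_ij, s_j in zip(w[i], s))
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i], changed = new, True
        if not changed:
            break
    return s


N = 64
stored = [hadamard_row(r, N) for r in (3, 10, 21)]
weights = train(stored)

# A "variant": stored pattern 0 with four units flipped.
cue = list(stored[0])
for k in (0, 5, 17, 40):
    cue[k] = -cue[k]

print(settle(weights, cue) == stored[0])  # True: the variant is captured
```

The stored patterns are Hadamard rows because their exact orthogonality guarantees that a cue this close to one of them is captured; with random patterns, capture is only probable.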

But because some of the things we memorize are themselves extended in time, we have to consider whether the category code is strung out in distinct spatiotemporal units, like the frames of movie film. We involuntarily memorize brief episodes (though not very accurately). On the movement side, we produce motor outputs that chain together unitary actions, as when we unlock a door or dial a telephone. Asleep, we create narratives with improbable qualities, often signaled by nonsensical segues and juxtapositions (nonviable chimeras, as it were). Is the sequence’s representation simply a chain of elementary spatiotemporal patterns, skillfully segued like a medley of songs? Or is neural sequencing more complicated, like those mental models that intervene when we recall a text? Certainly phonemics suggests some obligatory stage-setting (one reason that mechanical text-to-speech conversion is complicated is that look-ahead is required: some phonemes are modified when followed by certain others, so a planning buffer is needed). Just as in biology, there are two levels of mechanism to consider here: active firing and those passive bumps and ruts in the road. Active firing, as we have seen, is especially important for cloning spatiotemporal patterns; the territory attained may be important both locally and in seeding the faux fax distant version. And, of course, it may modify the connectivity beneath it. But how does a sequence get started from the passive connectivity and elaborate itself into extended lines of “music”? Is this the activation of a multilobed attractor within a single hexagon, or a circuit rider visiting different hexagons, each of which contributes a segment? As with the recognition-recall problem, there may be another multilevel solution — a hash could serve as a fingerprint for a full text, or a loose-fitting, centrally located abstract could have links to outlying details.
In the manner of the multimodality funnel for comb, recall might be a matter of links, but with activation in a certain order contributed by a multilobed attractor in the temporal equivalent of a convergence zone, acting much like an orchestral conductor in adjusting startup times.
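The hash option can be sketched with Python's standard `hashlib`. A digest supports recognition (recompute and compare) but not recall, since it cannot be run backward to reproduce the text. The helper names are hypothetical, and the normalization step is an assumption made for this example.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Fixed-size SHA-256 digest of a text, after light normalization."""
    # Lowercase and collapse whitespace so trivially different copies match.
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def recognize(candidate: str, stored_digest: str) -> bool:
    """Recognition: recompute the digest and compare. Nothing here can run the
    digest backward to reproduce the text, which would be recall."""
    return fingerprint(candidate) == stored_digest


memory = fingerprint("All mimsy were the borogoves, And the mome raths outgrabe.")

print(recognize("all MIMSY were the borogoves,  and the mome raths outgrabe.", memory))  # True
print(recognize("All mimsy were the borogoves, And the mome rats outgrabe.", memory))    # False
```

Unlike an attractor, a cryptographic hash captures almost no variants: beyond what the normalization absorbs, changing a single letter breaks the match.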


One clue to sequence representation is that we can often decompose both sensory and movement sequences at will. We easily discard the first three words of “Repeat after me — I swear to tell. . . .” when replying. Compare that to the rat that thinks life is more complicated than it really is, circling three times before pressing the bar in the Skinner box, simply because that’s what he happened to have done before being rewarded for some earlier bar press (shaping rats out of superstitious behavior is an important bit of customizing experiments to individual subjects). We can discard the inefficient parts of an exploratory sequence when repeating it, just as speakers can often paraphrase long questions from the audience before answering them. Indeed, our memories seem organized this way: readers tend to remember the mental model they constructed from a text rather than the text itself, and such abstraction probably happens as well for “film-clip experiences,” such as being an eyewitness to an accident. Some complicated sequences can, with enough practice, become securely enough embedded to survive major insults to the brain: for example, the aphasic patient who can sing the national anthem even though he cannot speak a nonroutine sentence, one that requires customizing a sequence before actually speaking. It’s not that different from what normally happens in all of us. A dart throw or basketball free throw, where the object is to do the movement exactly the same way each time by getting “in the groove” appropriate to the standard distance and standard projectile, may be secure — at least when compared to throwing at novel targets. Novelty may require a lot of offline planning during get-set, customizing the command sequence for the particular situation.


The difficulty of throwing accurately is actually what first led me to postulate that spatiotemporal patterns were cloned in the brain (to conclude the history in chapter 6), long before any of the detailed physiological studies of throwing performance. In the summer of 1980, I was sitting on a beach in the San Juan Islands, looking out at the Strait of Juan de Fuca between Washington State and British Columbia, and throwing stones at a rock that I had placed atop a log. I seldom hit it, so I moved closer and finally started hitting it more frequently. I got to thinking about why the task seemed so difficult. It was, I realized, that there was a launch window. If I let go of my projectile too early, before the launch window was reached, the projectile arched too high and went too far. If I let go too late, the path was too straight and hit below the target. If I moved closer, or used a larger target, the launch window lasted longer and so was easier to attain. Elementary physics. My motor neurons, I reflected, were too noisy — and so couldn’t settle down to controlling projectile release precisely enough to stay within the launch window. That’s where the story, hardly worth retelling, would surely have ended — except that I just happened to have some knowledge about how jittery the spinal motor neurons were. Indeed, I had done my Ph.D. thesis on that very subject 14 years earlier. So how wide was the launch window, in milliseconds? Did it match up with the noise levels of typical motor neurons? On the ferry ride home, I assumed it was just a matter of looking up the right formula in my old physics textbooks (I had, after all, been a physics major). But as soon as I thumbed through both my elementary and advanced mechanics texts, I realized that the variables of target size, height of release point above target, and the possible range of velocities meant that I was going to need to derive an appropriate equation from basic Newtonian principles. So I customized an equation, reveling in the fact that my rusty calculus still worked. I also realized that few things in biology could be similarly derived from basic principles, that particular histories were all-important in a way that they weren’t in physics. For throwing at a rabbit-sized target (10 cm high, 20 cm deep) from about a car length away (4 meters), the launch windows averaged out at about 11 milliseconds wide.
That was also about what I figured was the inherent noise in single motor neurons while in their self-paced mode. Yes, they matched — but I realized that something was very wrong. Most of us, I supposed, could hit that rabbit-sized target from two car lengths, or even three. The launch window for 8-meter throws worked out to 1.4 milliseconds. And there was no way that the motor neurons I knew so well were ever likely to attain that. I couldn’t consult the experts — I was the expert (at least on cat motor neurons, where the most detailed work had been done, and I knew from the neurologists’ EMG recordings that human forearm motor neurons weren’t much better).

That, too, should have been the end of the story. But I’d done a study only five years earlier on motor cortex neurons. And those cortical neurons were much noisier than spinal motor neurons under similar conditions, not quieter. No escape there. I’d been talking informally to neurophysiologists who worked in other brain centers about the possibility of accurate “clocks,” and it didn’t look as if anywhere in the brain had superprecise neurons that ticked along with very little timing jitter. So I had a persisting theoretical puzzle to chew on: how did we get precision timing from relatively jittery neurons? Salvation arrived in the form of a reprint that crossed my desk shortly thereafter, delayed for about one year by the Italian postal system and slow boats. It in turn led me to a lovely paper by John Clay and Bob DeHaan in the Biophysical Journal, about chick heart cells in a culture dish. Each cell, when sitting in isolation, beat irregularly. If they nudged two cells together, their beats would synchronize. As they kept adding cells, the jitter declined with each additional cell added to the cluster; what sounded as irregular as rain on the roof became as regular as a steadily dripping faucet. The interval’s coefficient of variation fell as the inverse square root of N, the number of cells in the cluster. To halve the interbeat jitter, just quadruple the number of cells. And it wasn’t just pacemaker cells but many kinds of relaxation oscillator, as J. T. Enright’s 1980 Science paper (subtitled “A reliable neuronal clock from unreliable components?”) soon made clear. All it took to throw with precision, I concluded, was lots of clones of the movement commands from the brain, all singing the same spatiotemporal pattern in a chorus. But the numbers of cells required were staggering: to double your throwing distance while maintaining the same hit rate required recruiting a chorus 64 times larger than the original one. Tripling the target distance took 729 times as many.
Clearly, the fourfold increase in neocortical numbers during ape-to-human evolution wasn’t enough to handle this, as I’d hoped it would be; those extra neurons would have to be temporarily borrowed somehow, perhaps in the way that the expert choir borrows the inexpert audience when singing the Hallelujah Chorus. Paradoxically, the Law of Large Numbers effectively said that the nonexperts could actually help improve performance beyond that of the experts alone. It took me another decade to imagine a way of recruiting the larger chorus: the cloning mechanism for spatiotemporal patterns of Act I.
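The inverse-square-root law and the chorus arithmetic can be checked with a short Python sketch; all names are hypothetical. The first function is a Monte Carlo simulation under a simplifying assumption made only for this sketch: each cell's interval is an independent Gaussian draw, and the synchronized cluster fires at their average, which reproduces the fall in the coefficient of variation as 1/√N. The second function reproduces the 64-fold and 729-fold figures. The text does not state how the launch window scales with distance; the exponent of 3 used here is inferred from those figures (64 = 8², 729 = 27²) and agrees with the roughly eightfold narrowing from 11 ms at 4 meters to 1.4 ms at 8 meters.

```python
import random
import statistics


def cluster_cv(n_cells: int, cell_cv: float = 0.1,
               trials: int = 20000, seed: int = 1) -> float:
    """Monte Carlo estimate of the interbeat coefficient of variation (CV)
    for a cluster of n_cells jittery oscillators.

    Simplifying assumption: each cell's next interval is an independent
    Gaussian draw (mean 1.0), and the synchronized cluster fires at their average.
    """
    rng = random.Random(seed)
    intervals = []
    for _ in range(trials):
        draws = [rng.gauss(1.0, cell_cv) for _ in range(n_cells)]
        intervals.append(sum(draws) / n_cells)
    return statistics.stdev(intervals) / statistics.mean(intervals)


def chorus_factor(distance_ratio: int, window_exponent: int = 3) -> int:
    """Relative chorus size needed to keep the same hit rate at a longer range.

    Assumes the launch window narrows as distance ** -window_exponent, so timing
    jitter must shrink by that factor; since jitter ~ 1/sqrt(N), the cell count
    must grow by the square of it.
    """
    jitter_reduction = distance_ratio ** window_exponent
    return jitter_reduction ** 2


for n in (1, 4, 16):
    print(n, round(cluster_cv(n), 3))  # each fourfold increase should roughly halve the CV

print(chorus_factor(2), chorus_factor(3))  # 64 729
```

Averaging independent draws is the simplest model that yields the 1/√N law; real heart cells synchronize through mutual coupling, which this sketch does not attempt to model.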


Apropos the advantages of nonexperts, the composer Brian Eno tells an interesting story about an orchestra whose members were, quite intentionally, a mixture of the mildly experienced and the self-selected musically naive. In recounting his experience with the Portsmouth Symphonia, Eno said that one would occasionally hear some nimble playing emerge from the too-early, too-loud, off-tune chaos — which he called “classical music reduced to some sort of statistical average.” Some amateur-night variation is very much what I imagine first happening in our premotor cortex as one “gets set” to throw at a novel target, with the variants gradually standardizing to become the chorus. I imagine the practiced precision appearing in the several seconds that it takes hexagonal competition and error correction to stabilize a widespread, precise version of the most successful variant.


The CD player or jukebox on automatic serves as a fancier-than-chimes example of a state machine for spatiotemporal patterns, pulling in one platter after another and playing them. Like chimes on the quarter hour, this suggests separate performances that don’t overlap in time, in the manner of the various instrument groups that perform one after the other at the beginning of A Young Person’s Guide to the Orchestra.

One (likely oversimplified) model of schemas for sequence is quite similar. A hexagonal code for the calling sequence itself is likely a multilobed attractor, one that cycles through its various basins of attraction and, in the manner of an orchestra’s conductor, activates one link after another to outlying hexagonal territories that generate their own spatiotemporal patterns. But most sequencers are probably more like typical orchestral compositions, where the conductor brings in a new group to overlay the ongoing contributions of the earlier groups of instruments. In a canon, a melody is repeated by a second voice after a delay, so that the two overlap (as in “Row, Row, Row Your Boat”). There is, of course, no requirement for a conductor per se; string quartets manage without one, and complex patterns can arise from simple rules.


Brian Eno also notes that doing something well (“becoming a pro”) can lead to a lack of alertness about interesting variations, as if (in my terms) an attractor had created a compelling groove. Fortunately, the neocortical Darwin Machine for orchestrating movements promises to be not only quicker (seconds vs. lifetimes) at producing pros, but also resettable, so that amateurs alert to interesting variations can still emerge a bit later in the same work space.


Perhaps, you might say (as I eventually did), the spinal motor neurons are simply being commanded to fire at the right time by descending commands from motor cortex — that is, they are not making the decisions within those basins of attraction in the spinal cord itself. The spinal motor neurons might be noisy on their own but, with the brain serving as a square-dance caller, they might be precise repeaters. The precision might be upstream.

One can, with such circuit-riding state machine analogies, easily imagine how the compulsion to finish a song line could arise in those crying children. What’s being stirred up is that central state machine with links to the components. As each component is activated, feedback from it reinforces the forward motion of the state machine attractor. The prompting voice advances it too, and the omission of the prompt on the last word may not matter once the endogenous attractor has generated sufficient forward momentum.

A more serious problem for the neurological imagination is finding the missing nouns in that give sentence. Sentences, too, are sequences at the performance level (though not in underlying structure, where trees and boxes-within-boxes are better analogies than paths). Think of give as starting up an attractor with three lobes, each with reinforcing feedback from its linked attractor basins at a distance. It may be that this central attractor’s spatiotemporal pattern undergoes a characteristic change when all side basins have full feedback: it changes from unfulfilled to fulfilled, and this is one of the necessary conditions for you to judge the sentence as making sense. So long as it remains unfulfilled, you keep trying out different candidates for those actor, recipient, and object nouns. Actually, I assume that many variations exist in parallel, and that they compete for territory until one achieves the especially powerful fulfilled spatiotemporal pattern — in effect, that particular variant shouts “Bingo!” because all its slots are legally filled. (I discuss a lingua ex machina more explicitly in chapter 5 of How Brains Think.)
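The unfulfilled-to-fulfilled idea can be caricatured as slot filling. The Python sketch below is a toy under stated assumptions: the lexicon, the animacy rule in `toy_fits`, and every name are hypothetical, and a serial loop stands in for the parallel competition imagined in the text. The billboard's Give him. supplies an actor (the inferred "you") and a recipient, so the frame stays unfulfilled until some object turns up.

```python
from itertools import product
from typing import Callable, Dict, List, Optional

# The three roles that "give" demands (from the text); everything else is a toy.
GIVE_SLOTS = ("actor", "recipient", "object")


def is_fulfilled(frame: Dict[str, Optional[str]]) -> bool:
    """'Fulfilled' only when every slot holds some candidate."""
    return all(frame.get(slot) is not None for slot in GIVE_SLOTS)


def compete(candidates: Dict[str, List[str]],
            fits: Callable[[str, str], bool]) -> Optional[Dict[str, str]]:
    """Try every combination of candidate nouns; return the first variant whose
    slots are all legally filled ("Bingo!"), or None if it stays unfulfilled.

    A serial loop stands in for the parallel competition imagined in the text.
    """
    # A slot with no candidates yet can only be filled by None.
    pools = [candidates.get(slot) or [None] for slot in GIVE_SLOTS]
    for combo in product(*pools):
        frame = dict(zip(GIVE_SLOTS, combo))
        if is_fulfilled(frame) and all(fits(s, w) for s, w in frame.items()):
            return frame
    return None


ANIMATE = {"you", "him", "her", "the child"}  # hypothetical lexicon


def toy_fits(slot: str, word: str) -> bool:
    """Hypothetical legality rule: actors and recipients must be animate."""
    return word in ANIMATE if slot in ("actor", "recipient") else True


billboard = {"actor": ["you"], "recipient": ["him"], "object": []}
print(compete(billboard, toy_fits))  # None: still searching for the object

billboard["object"] = ["a call"]
print(compete(billboard, toy_fits))
# {'actor': 'you', 'recipient': 'him', 'object': 'a call'}
```

Keeping "fulfilled" (every slot filled) separate from "legal" (`toy_fits`) mirrors the text's two conditions: a variant wins only when all its slots are filled and the fillers are acceptable.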
